Fall Diploma Ceremony

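On September 25, the Graduate School of Information Sciences held its diploma ceremony, and Mike, an M2 student, was awarded a master's degree.

Message from the graduate:

I am deeply grateful for my professor's guidance and for the help of my lab mates. I will be continuing my research, so I hope to work even more closely with my fellow students.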

Prof. Takizawa gave a talk on the performance evaluation of the second-generation SX-Aurora TSUBASA at the NEC User Group Meeting, held online on September 24.

The title was “Early Evaluation of the 2nd-generation SX-Aurora TSUBASA.”

The second-generation SX-Aurora TSUBASA is also used in Supercomputer AOBA, which will start operation at the Cyberscience Center on October 1.

https://www.ss.cc.tohoku.ac.jp/

The performance evaluation results show that the second-generation SX-Aurora TSUBASA can efficiently execute memory-bound scientific computations.

We are looking forward to Supercomputer AOBA going into operation.

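At 15:00 on July 31, 2020, the Cyberscience Center's large-scale scientific computing system, built around SX-ACE, was shut down for a system upgrade.

The old system went into operation in February 2015 and, used by researchers across Japan, produced a great many results.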

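Now, out of the blue, here is a spot-the-difference puzzle.

What differs between the SX-ACE at installation (top) and just before shutdown (bottom)?

The answer: semi-transparent partitions were added!

SX-ACE is cooled by cold air drawn in from the front and exhausts the warmed air from the rear. Separating these two airflows with partitions was intended to improve cooling efficiency.

So efforts to improve performance continued even while the system was in operation!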

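The system is now being replaced with a new one built around SX-Aurora TSUBASA, which is scheduled to start operation in October 2020.

Together with the results of the nickname contest, a first for the center, there is a lot to look forward to.

The list of research results obtained with the large-scale scientific computing system is here: https://www.ss.cc.tohoku.ac.jp/report/index.html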

Hello, this is Ishizuka, an M1 student.

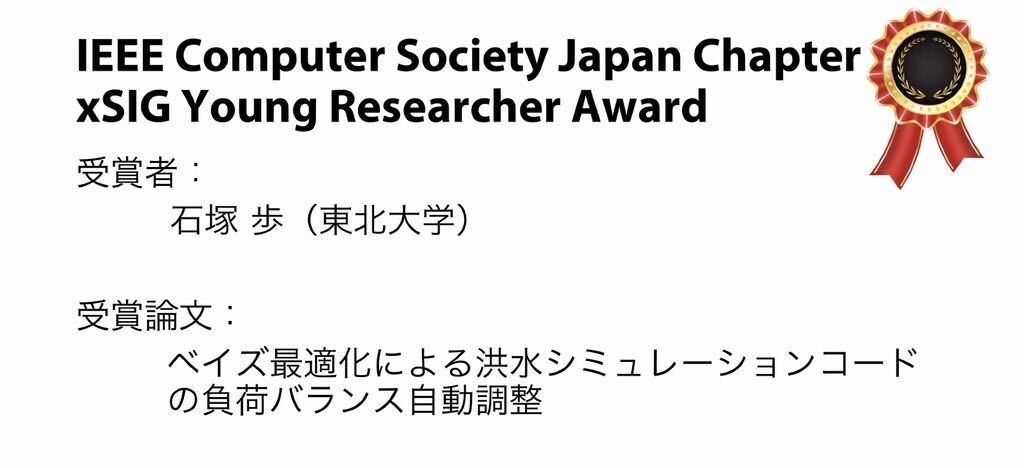

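I presented at SWoPP 2020 (the 2020 "Fukui" Summer Workshop on Parallel/Distributed/Cooperative Processing), held from July 29 to 31, and received the IEEE Computer Society Japan Chapter xSIG Young Researcher Award.

Because xSIG (cross-disciplinary workshop on computing Systems, Infrastructures, and programminG) is held jointly with SWoPP starting this year, the award was given in this form.

Owing to current circumstances, the workshop was held online for the first time, so I could not go to Fukui. Even so, with chat-based discussions and an award ceremony over Zoom, it was a very rewarding conference.

I gave my online presentation from the lab, and because several members came to cheer me on, I was able to present calmly.

I would like to thank everyone in the lab once again for their day-to-day support of my research.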

ベイズ最適化による洪水シミュレーションコードの負荷バランス自動調整 (Automatic Load-Balance Tuning of a Flood Simulation Code Using Bayesian Optimization)

石塚 歩,山下 毅,江川 隆輔,滝沢 寛之,山本 道,風間 聡

SWoPP 2020 was held on July 29-31, 2020. M1 student Ishizuka received the IEEE Computer Society Japan Chapter xSIG Young Researcher Award at this workshop.

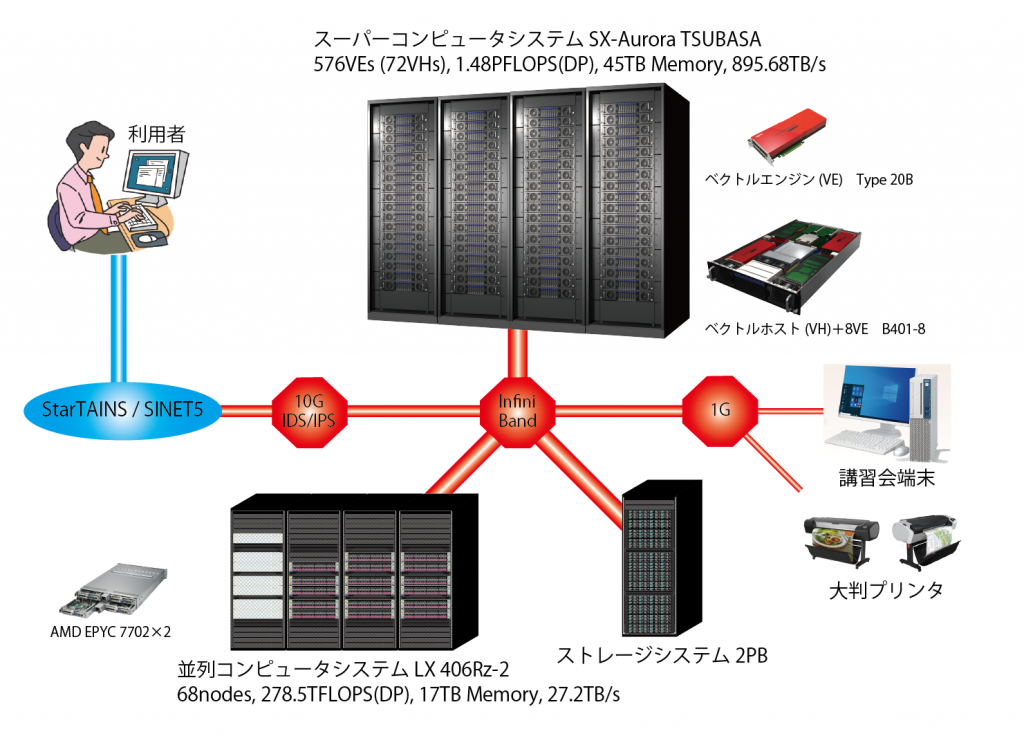

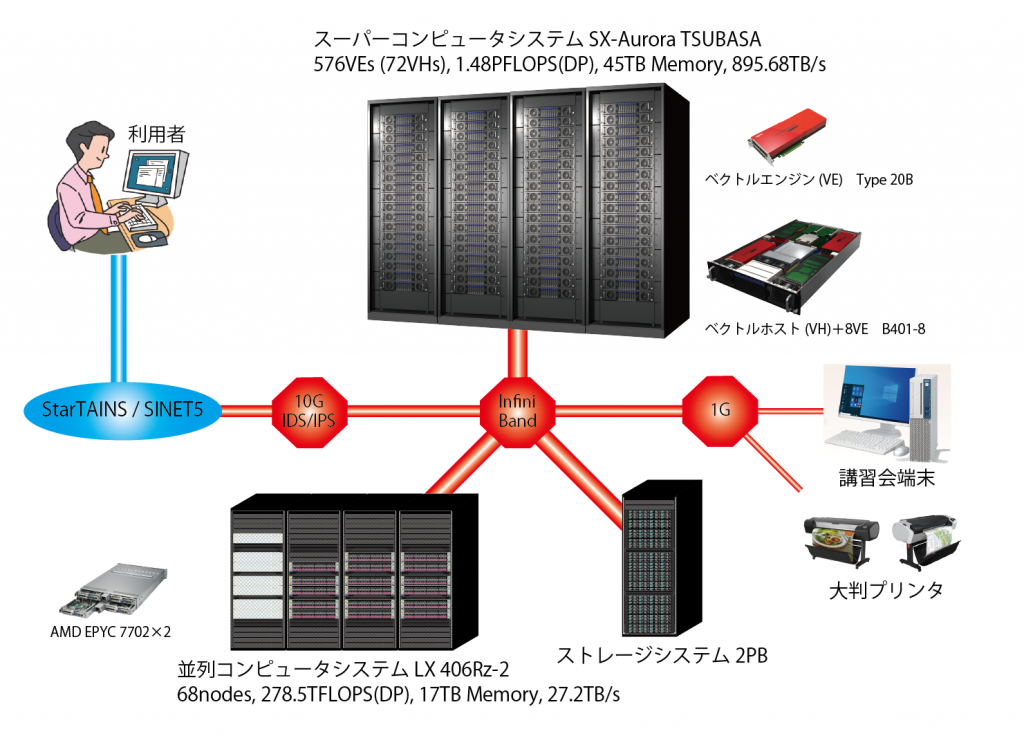

A new supercomputer system will be installed at the Cyberscience Center, where our laboratory is located.

It is scheduled to go into service in October 2020.

Performance information:

https://www.cc.tohoku.ac.jp/NEWS/newcomputer/new-supercomputer-2020.html

Tohoku University is inviting the public to propose a nickname for the new system, so if you are interested, please apply.

The submission deadline is 17:00 on July 31.

For details, see the following link:

https://www.cc.tohoku.ac.jp/news.html?url=https://www.cc.tohoku.ac.jp/NEWS/nickname/nickname-supercomputer.html