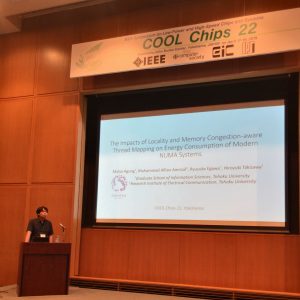

The IEEE Symposium on Low-Power and High-Speed Chips and Systems (COOL Chips 22), an international conference, was held in Yokohama on April 17-19, 2019.

Third-year Ph.D. student Mulya Agung gave a presentation titled “The Impacts of Locality and Memory Congestion-aware Thread Mapping on Energy Consumption of Modern NUMA Systems”.

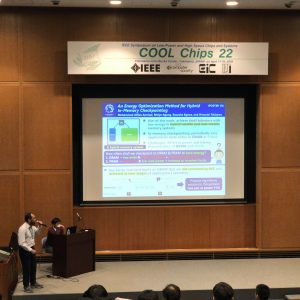

Researcher Muhammad Alfian Amrizal gave a presentation titled “An Energy Optimization Method for Hybrid In-Memory Checkpointing”. He also received the Best Poster Award at the conference.

Presentation by Agung (left) and presentation by Alfian (right)

Alfian introducing his paper (left) and receiving the Best Poster Award (right)

New group photo uploaded (2019)

All of the lab members took a new group photo today at the Cyberscience Center.

Recently, more and more students are choosing to join our lab.

Let’s enjoy life in the lab and do research together!

Kickoff seminar held for the new semester

At the beginning of the new semester, our lab members held a kickoff seminar. Over pizza ordered by the professors, everyone gave a brief self-introduction.

At the end of the seminar, Prof. Takizawa gave a presentation titled “Life in the lab”.

We hope everyone enjoys their life in the lab, as well as at the university.

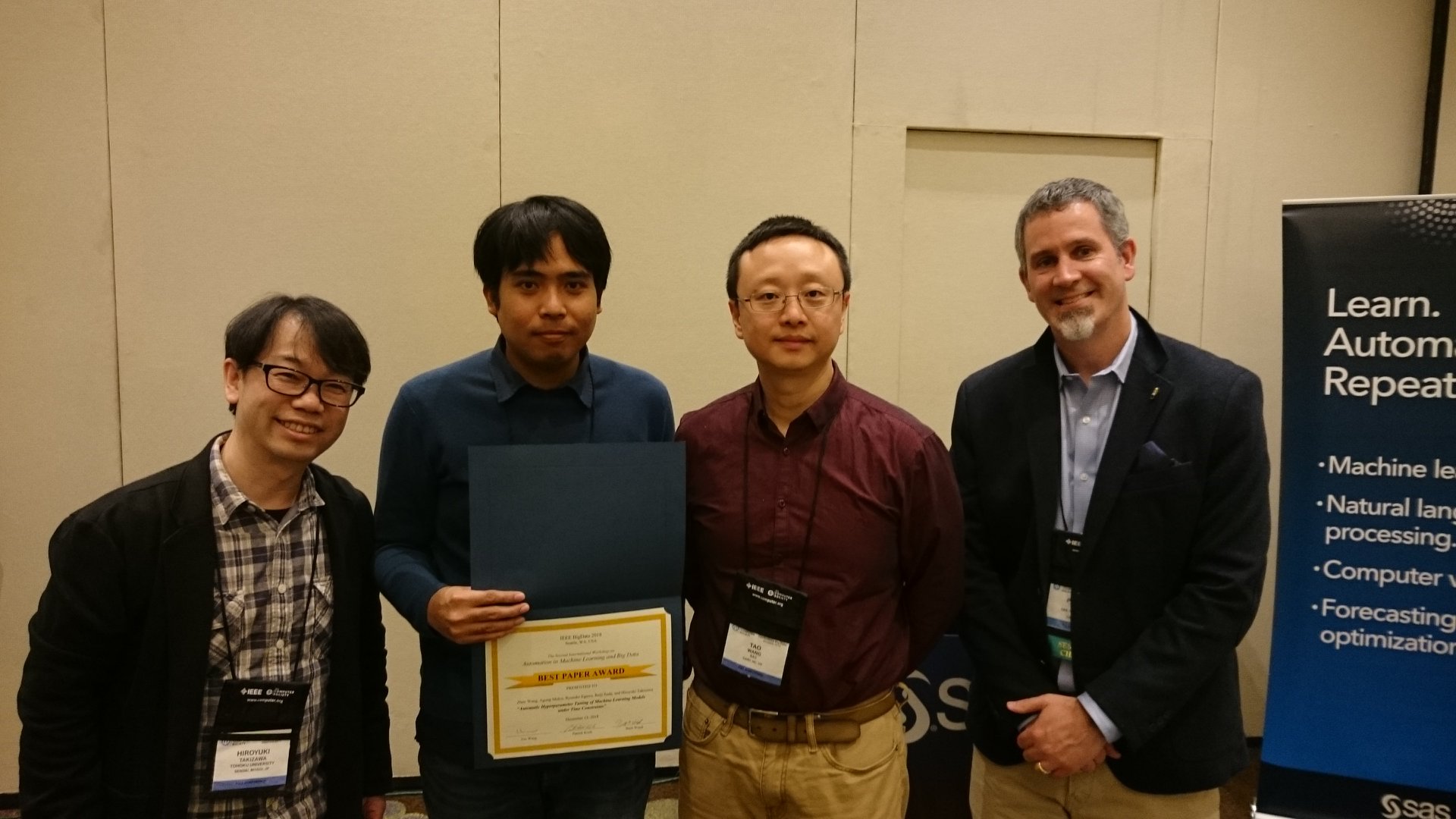

Best Paper Award!! (AutoML2018)

Booth Exhibition @ SC18 Dallas

Our group exhibited its research activities at SC18 together with IFS (Institute of Fluid Science) and IMR (Institute for Materials Research)! Thank you for visiting our booth.

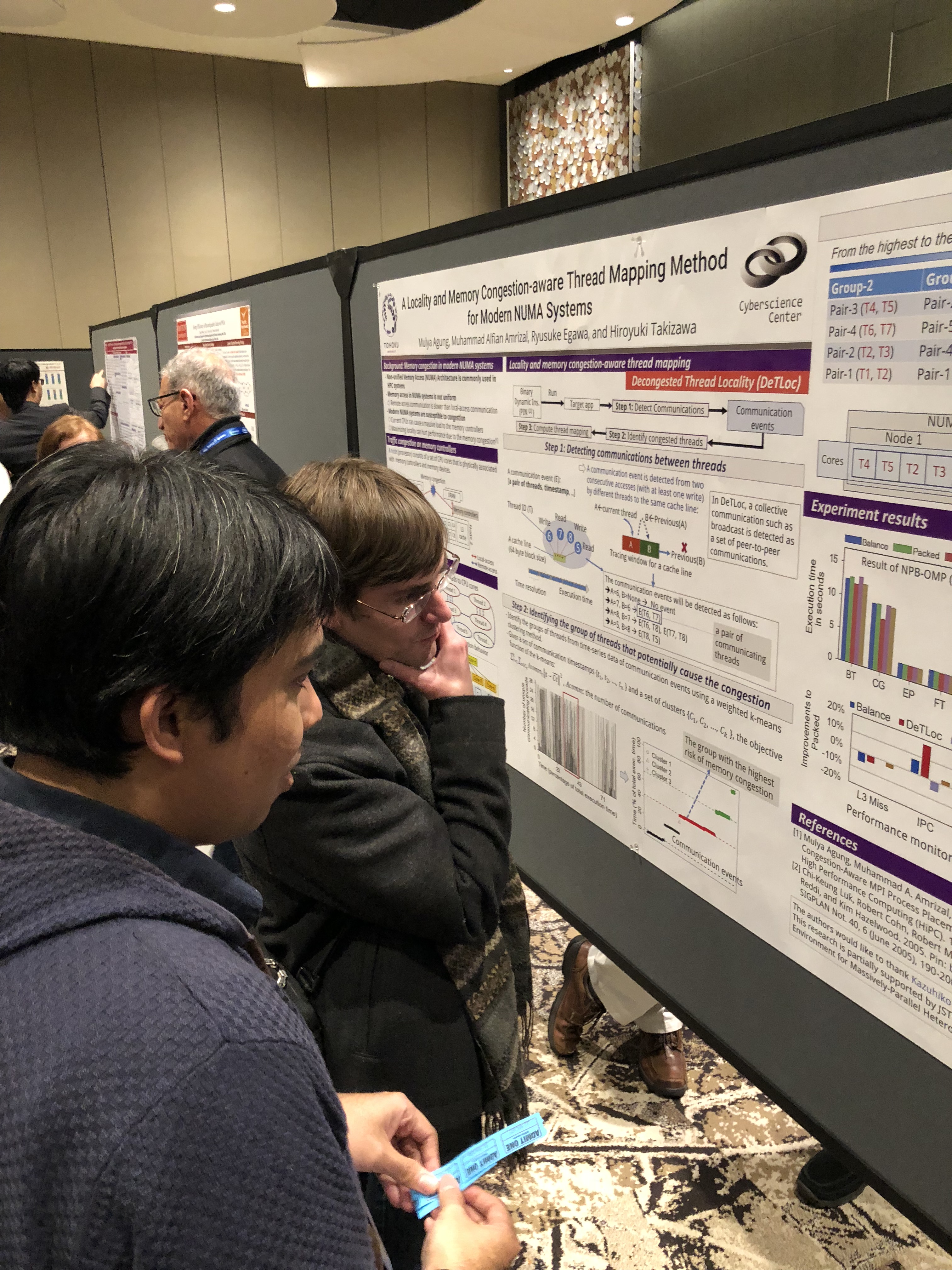

Poster presentation @ SC18

Dr. Keita Teranishi will visit our lab on Nov 22!

Dr. Keita Teranishi will visit our lab and give a talk on Nov 22.

He is a Principal Member of Technical Staff at Sandia National Laboratories, California, USA. He received the BS and MS degrees from the University of Tennessee, Knoxville, in 1998 and 2000, respectively, and the PhD degree from The Pennsylvania State University in 2004. His research interests include parallel programming models, fault tolerance, numerical algorithms, and data analytics for high-performance computing systems.

The abstract of his talk is as follows.

Abstract: Tensors have found utility in a wide range of applications, such as chemometrics, network traffic analysis, neuroscience, and signal processing. Many of these data science applications have increasingly large amounts of data to process and require high-performance methods to provide a reasonable turnaround time for analysts. Sparse tensor decomposition is a tool that allows analysts to explore a compact representation (low-rank models) of high-dimensional data sets, expose patterns that may not be apparent in the raw data, and extract useful information from the large amount of initial data. In this work, we consider decomposition of sparse count data using CANDECOMP-PARAFAC Alternating Poisson Regression (CP-APR).

Unlike the Alternating Least Squares (ALS) version, the CP-APR algorithm involves nontrivial constrained optimization of a nonlinear, nonconvex function, which contributes to its slow adaptation to high-performance computing (HPC) systems. Recent studies by Kolda et al. suggest multiple variants of the CP-APR algorithm that are amenable to both data and task parallelism, but parallel implementations of them face several challenges due to the continuing trend toward a wide variety of HPC system architectures and programming models.

To this end, we have implemented a production-quality sparse tensor decomposition code, named SparTen, in C++ using Kokkos as a hardware abstraction layer. By using Kokkos, we have been able to develop a single code base and achieve good performance on each architecture. Additionally, SparTen is templated on several data types that enable mixed precision, allowing the user to tune performance and accuracy for specific applications. In this presentation, we will use SparTen as a case study to document the performance gains, the performance/accuracy tradeoffs of mixed precision in this application, and the development effort, and to discuss the level of performance portability achieved. Performance profiling results from each of these architectures will be shared to highlight the difficulties of efficiently processing sparse, unstructured data. By combining these results with an analysis of each hardware architecture, we will discuss some insights for improved use of the available cache hierarchy, the potential costs and benefits of analyzing the underlying sparsity pattern of the input data as a preprocessing step, critical aspects of these hardware architectures that allow for improved performance in sparse tensor applications, and where performance may still have been left on the table due to having a single algorithm implementation on diverging hardware architectures.

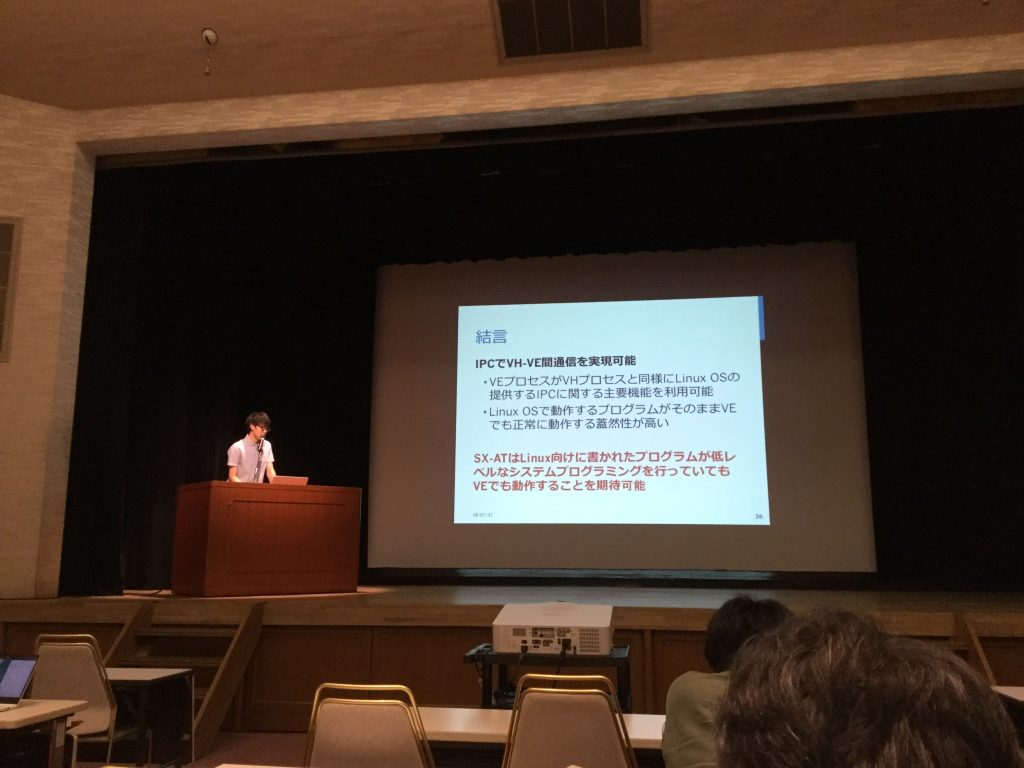

M1 student Shiotsuki presented at SWoPP2018

M1 student Shiotsuki gave a presentation at SWoPP2018 (Summer United Workshops on Parallel, Distributed and Cooperative Processing), held at the Kumamoto City International Center (熊本市国際交流会館) from July 30th to August 1st.

SWoPP2018:

https://sites.google.com/site/swoppweb/swopp2018

His presentation was titled “Performance evaluation of inter-process communication of SX-Aurora TSUBASA”.

Presentation at SX-Aurora TSUBASA Forum

I gave a talk at the SX-Aurora TSUBASA Forum, held at NEC headquarters.

My talk covered a hot topic: the performance and functionality of NEC’s new product, SX-Aurora TSUBASA.

I am glad the audience enjoyed it.

https://jpn.nec.com/event/180727aurora/index.html (in Japanese)

Presentation at iWAPT2018

I attended IPDPS 2018 in Vancouver and gave a talk at iWAPT 2018, the International Workshop on Automatic Performance Tuning, on behalf of Yuki Kawarabatake, the first author of the work. During my stay, I also visited Stanley Park, a beautiful park full of nature. It’s a pity I’m not good at taking selfies… 🙁

Hiro